Containers - What Are They Good For? Crafting our Build Environments

In the last post, I looked at what comes "out of the box" with the Docker support in Visual Studio. While what we saw makes a lot of sense for a good "F5" story, it's not that great for an actual build environment.

In a normal build environment, we have not only the components to build and package, but also to run our tests. And for us, tests mean not only unit tests, but integration tests that run against a database. Ideally, we'd test our database migration scripts as well. Putting it all together, our "build":

- Restores

- Builds

- Migrates the DB

- Runs unit tests

- Runs integration tests against the DB

- Packages

- Publishes
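Sketched as a PowerShell build script, the first five of those steps might look something like the following. This is a hypothetical `Build.ps1`, not the one used in this series. The solution and test project paths are made up, and the migration step is a placeholder because the post doesn't say which tool runs the migration scripts. `Exec` is a small helper (a common pattern in PowerShell build scripts) that fails the build when a native command exits with a non-zero code.

```powershell
# Hypothetical Build.ps1 sketch: the solution/test paths and the migration
# step are placeholders, not the real project layout.

# Run a scriptblock (typically one native command) and fail the build
# if that command exits with a non-zero code.
function Exec {
    param([Parameter(Mandatory, Position = 0)][scriptblock]$Command)
    $global:LASTEXITCODE = 0   # reset so a stale exit code can't leak in
    & $Command
    if ($LASTEXITCODE -ne 0) {
        throw "Command failed with exit code ${LASTEXITCODE}: $Command"
    }
}

function Invoke-CiBuild {
    param(
        [string]$Solution = 'ContosoUniversity.sln',                           # hypothetical
        [string]$UnitTests = 'test\ContosoUniversity.UnitTests',               # hypothetical
        [string]$IntegrationTests = 'test\ContosoUniversity.IntegrationTests', # hypothetical
        # Placeholder: swap in whatever actually runs your migration scripts.
        [scriptblock]$Migrate = { Write-Host 'Migrating the DB (placeholder)' }
    )
    Exec { dotnet restore $Solution }                              # Restores
    Exec { dotnet build $Solution --no-restore -c Release }        # Builds
    Exec $Migrate                                                  # Migrates the DB
    Exec { dotnet test $UnitTests --no-build -c Release }          # Runs unit tests
    Exec { dotnet test $IntegrationTests --no-build -c Release }   # Runs integration tests
}
```

Calling `Invoke-CiBuild` at the end of the script, with your real paths passed as parameters, runs the steps in order and stops at the first failure. Packaging and publishing are deliberately left out.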

I'm going to leave off the last two steps for now and focus on containers for the "Continuous Integration" part of our environment. Our big decision here is: what exactly is our build environment? We have a few options:

- Use the "kitchen sink" image from the VSTS team, just using what they've built as our image

- Start from default images and layer on the pieces we need into one single image

- Use Docker Compose to compose multiple images together into a build "environment"

In our normal container-less environment, we run our build on the host machine. We now need to construct a build environment in a container, or multiple containers. Let's look first at constructing a build environment.

Kitchen Sink approach

In this approach, instead of trying to add each dependency on our own, we'll just use exactly what the build server uses. Not all cloud providers have this available, but VSTS lets you run build agents as Docker images. They've got a ton available and we can pick and choose our OS and other requirements.

On top of that, they have two separate flavors of images:

- Base image with only VSTS agent connections

- Kitchen sink image with a ton of dependencies pre-installed

There is a problem here, however. It's Ubuntu-only! We want a Windows image, not a *nix one.

There are older VSTS container images, but they're very old and no longer maintained.

Suppose we did go this route: what might it give us? Mainly, the ability to run VSTS agents as containers and, more intriguingly, the ability to scale up and down through container orchestrators. But since it's *nix-only, it won't work for us. Kitchen sink, sunk.

Single image built up

Just working with a single image can be a bit nicer. We don't have to worry about composing multiple images together into a network, everything is self-contained. So our first step here is - what should be our starting image? To figure this out, let's see what our image requirements are:

- .NET Core 2.1 SDK

- Powershell 4 (build script)

- Some SQL, maybe SqlLocalDb? SQL Express?

Not too bad, but we see already that we have some tough decisions. Container images are great if everything is already there, but because there's no "apt-get" in Windows, installing dependencies is...painful.

First up, how about the .NET Core SDK? Is there a build image available, or do we have to start from something even more fundamental? Well, we've already used a build image for local development, so we can use that as our starting point.

However, the other two pieces aren't installed. No problem, right? Well, no, because Powershell 4 requires the full .NET Framework, and that's not easy to install via the command line. We could install Powershell Core, which is the cross-platform, .NET Core-based version of Powershell. That's not too bad to install into a container, provided someone else has already figured it out.

My other option of course is to eliminate the Powershell dependency, but this means using just plain build.cmd files as my build script, and those are...ugly.

And for SQL Server, there are images, but maybe we just want SQL LocalDB? I couldn't find a way to install it strictly from the command line, but there is a Chocolatey package. However, then I'd need Chocolatey, and Chocolatey's requirements are a problem for me: it needs the full .NET Framework. I'm trying to keep things lean and only have .NET Core on my build agent, so again, this is out.

At this point, we're in a bit of a bind with a single image. I can certainly bring all the pieces together, but it seems like my dependencies are...many. Bringing in PowerShell 6, the .NET Core version, seems reasonable. But beyond that, adding the additional dependencies makes my container grow ever larger in size.

So for now, single image is out.

Docker Compose

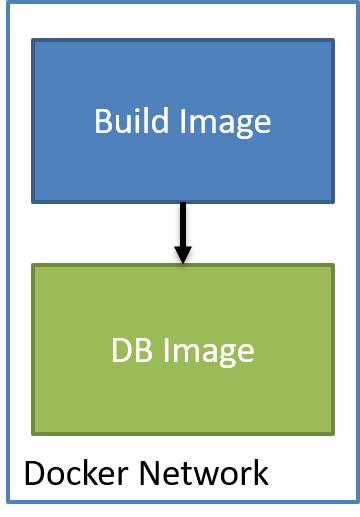

Right now I've been trying to get all my build dependencies on a single image, but what if we built our build "environment" not as a single image, but similar to our actual build environment? What if we built something like this:

Instead of trying to jam everything into one single image, what if we instead focus on two things:

- What is needed to build?

- What is needed to test?

To build, I don't have a lot of dependencies. Really it's just the .NET Core SDK, and PowerShell Core to kick things off. In a future release of the Docker .NET Core SDK images, the team is looking at including PowerShell Core, but for now, we can just create an image to do this ourselves.

Right now there isn't a public image that contains both of these dependencies, so we need to decide which is harder:

- Start with PowerShell Core image, add .NET Core

- Start with .NET Core 2.1 SDK image, add PowerShell Core

Both dockerfiles are publicly available, so I don't have to figure out how to install each. All things being equal, I'd really like to go with the smaller base image. However, there's a wrinkle: to add either of these, I need something that can download a file from the web. If you look at each dockerfile, they both use the full windowsservercore image, with the full .NET Framework, as an intermediate build stage.

We'll see more in the next post, but even though images are cached on the host machine, I want to make sure I'm building from images that are already cached. Pulling an uncached image, when the images are huuuuuuge, can crater a build.
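One way to keep an eye on this is a quick pre-flight check that lists which images aren't in the local cache yet, so they can be pulled up front rather than in the middle of a build. A minimal sketch: `Get-UncachedImage` is a name I made up, and it relies on `docker images -q <repository:tag>` printing an image ID only when that image exists locally.

```powershell
# Sketch: return the names of any images that are not in the local Docker cache.
function Get-UncachedImage {
    param([Parameter(Mandatory, Position = 0)][string[]]$Image)
    foreach ($name in $Image) {
        # `docker images -q repo:tag` prints the image ID if the image is
        # cached locally, and nothing otherwise.
        $id = docker images -q $name
        if (-not $id) { $name }
    }
}
```

For example, passing it `'microsoft/powershell:nanoserver-1709', 'microsoft/mssql-server-windows-developer'` and piping the result to `ForEach-Object { docker pull $_ }` pulls whatever is missing before the build starts.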

To be honest, neither option is that great, since both the SDK and Server Core downloads are large, so let's just flip a coin and say "start with the PowerShell image". With this starting point, let's look at the dockerfile for our "build" image:

```dockerfile
# escape=`

# Installer image
FROM microsoft/powershell:nanoserver-1709 AS installer-env

SHELL ["pwsh", "-Command", "$ErrorActionPreference = 'Stop'; $ProgressPreference = 'SilentlyContinue';"]

# Retrieve .NET Core SDK
ENV DOTNET_SDK_VERSION 2.1.300

RUN Invoke-WebRequest -OutFile dotnet.zip https://dotnetcli.blob.core.windows.net/dotnet/Sdk/$Env:DOTNET_SDK_VERSION/dotnet-sdk-$Env:DOTNET_SDK_VERSION-win-x64.zip; `
    $dotnet_sha512 = '4aa6ff6aa51e1d71733944e10fd9e37647a58df7efbc76f432b8c3ffa3f617f9da36f72532175a1e765dbaf4598a14350017342d5f776dfe8e25d5049696d003'; `
    if ((Get-FileHash dotnet.zip -Algorithm sha512).Hash -ne $dotnet_sha512) { `
        Write-Host 'CHECKSUM VERIFICATION FAILED!'; `
        exit 1; `
    }; `
    `
    Expand-Archive dotnet.zip -DestinationPath dotnet; `
    Remove-Item -Force dotnet.zip

# SDK image
FROM microsoft/powershell:nanoserver-1709

COPY --from=installer-env ["dotnet", "C:\\Program Files\\dotnet"]

# In order to set system PATH, ContainerAdministrator must be used
USER ContainerAdministrator
RUN setx /M PATH "%PATH%;C:\Program Files\dotnet"
USER ContainerUser

# Configure Kestrel web server to bind to port 80 when present
ENV ASPNETCORE_URLS=http://+:80 `
    # Enable detection of running in a container
    DOTNET_RUNNING_IN_CONTAINER=true `
    # Enable correct mode for dotnet watch (only mode supported in a container)
    DOTNET_USE_POLLING_FILE_WATCHER=true `
    # Skip extraction of XML docs - generally not useful within an image/container - helps performance
    NUGET_XMLDOC_MODE=skip

# Trigger first run experience by running arbitrary cmd to populate local package cache
RUN dotnet help
```

Not that exciting: we're just copying the .NET Core 2.1 SDK dockerfile and using the PowerShell Core Docker image as the base instead of a plain nanoserver/windowsservercore image.
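The checksum step in the installer stage is the part doing real work: the image build fails outright if the downloaded zip doesn't match the pinned SHA-512. If you want the same check outside of Docker, say on a zip you've downloaded by hand, it's only a few lines. A minimal sketch; `Test-FileSha512` is a hypothetical name, not part of any module.

```powershell
# Sketch: the same SHA-512 comparison the installer stage performs.
function Test-FileSha512 {
    param(
        [Parameter(Mandatory)][string]$Path,
        [Parameter(Mandatory)][string]$ExpectedHash
    )
    $actual = (Get-FileHash -Path $Path -Algorithm SHA512).Hash
    # Get-FileHash returns uppercase hex; PowerShell's -eq is case-insensitive
    # for strings, so a lowercase expected hash (as in the dockerfile) still matches.
    $actual -eq $ExpectedHash
}
```

Used like `if (-not (Test-FileSha512 -Path dotnet.zip -ExpectedHash $dotnet_sha512)) { throw 'CHECKSUM VERIFICATION FAILED!' }`, it mirrors the `RUN` step above.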

To make sure everything works, I'll build the image and run a couple of commands against it from a command line:

```
> docker build .\ -t contosouniversity/ci
... lots of messages
> docker run -it contosouniversity/ci pwsh -Command Write-Host "Hello World"
Hello World
> docker run -it contosouniversity/ci pwsh -Command dotnet --version
2.1.300
```

Good to go!
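If you'd rather not eyeball that output every time the image changes, the version check can be scripted. A sketch under a couple of assumptions: the image is tagged `contosouniversity/ci` as above, and 2.1.300 is the SDK version you expect. I've dropped `-it`, since no interactive terminal is needed just to capture output, and `Test-CiImage` is a made-up name.

```powershell
# Sketch: smoke-test the CI image by checking the SDK version it reports.
function Test-CiImage {
    param(
        [string]$Image = 'contosouniversity/ci',
        [string]$ExpectedSdk = '2.1.300'
    )
    # --rm removes the container once the command exits.
    $output = docker run --rm $Image pwsh -Command 'dotnet --version'
    if ($LASTEXITCODE -ne 0) { throw "docker run failed with exit code $LASTEXITCODE" }
    # Compare the last line of output, trimmed, with the expected version.
    "$($output | Select-Object -Last 1)".Trim() -eq $ExpectedSdk
}
```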

Now that I have a build image that contains my dependencies, I need to craft a docker-compose file that stitches together my source from the host, the build image, and the database image I'm using in my "local running" environment:

```yaml
version: '3'

services:
  ci:
    image: contosouniversity/ci
    build:
      context: .
      dockerfile: ci\Dockerfile
    volumes:
      - .\:c:\src
    working_dir: \src
    environment:
      - "ConnectionStrings:DefaultConnection=Server=test-db;Database=contosouniversity-test;User Id=sa;Password=Pass@word"
    depends_on:
      - test-db

  test-db:
    image: microsoft/mssql-server-windows-developer
    environment:
      - sa_password=Pass@word
      - ACCEPT_EULA=Y
    ports:
      - "6433:1433"

networks:
  default:
    external:
      name: nat
```

I have two services: my build image, ci, and the database, test-db. Instead of copying the source from the host into the build image, I simply mount the current directory at C:\src. With all this together, I can run the build inside my Docker environment like this:

```
docker-compose -f ./docker-compose.ci.yml -p contosouniversitydotnetcore-ci up -d --build --remove-orphans --force-recreate
docker-compose -f ./docker-compose.ci.yml -p contosouniversitydotnetcore-ci run ci pwsh .\Build.ps1
```

With this in place (inside a single Powershell script to make my life easier), I can run this build locally, and, eventually, my build succeeds!
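A wrapper along those lines might look something like this sketch. It isn't the script used here, just one way to string the two `docker-compose` commands together and stop on a non-zero exit code. The `down` teardown in the `finally` block is my own addition, so the compose project's containers don't linger after a failed build, and `Invoke-ContainerBuild` is a hypothetical name.

```powershell
# Sketch: bring up the CI compose project, run the build in the ci service,
# and always tear the project down afterwards.
function Invoke-ContainerBuild {
    param(
        [string]$ComposeFile = './docker-compose.ci.yml',
        [string]$Project = 'contosouniversitydotnetcore-ci'
    )
    # Arguments shared by every docker-compose call (array splatting).
    $common = @('-f', $ComposeFile, '-p', $Project)

    try {
        docker-compose @common up -d --build --remove-orphans --force-recreate
        if ($LASTEXITCODE -ne 0) { throw "docker-compose up failed with exit code $LASTEXITCODE" }

        docker-compose @common run ci pwsh .\Build.ps1
        if ($LASTEXITCODE -ne 0) { throw "Build failed with exit code $LASTEXITCODE" }
    }
    finally {
        # Assumption: removing the project's containers is wanted even when the
        # build fails. External networks such as 'nat' are not removed by down.
        docker-compose @common down
    }
}
```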

Using containers as my build environment means I have an absolutely controlled, deterministic, immutable build environment that I know won't fail because of some wonky environment setting.
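One caveat worth knowing: in a version 3 compose file, `depends_on` only controls the order in which containers start. It doesn't wait for SQL Server inside `test-db` to actually accept connections. If the integration tests ever race the database, a small retry loop in the build script, run before the integration tests, can smooth that over. A sketch; `Wait-TcpPort` is a hypothetical helper, and an open port is only a rough proxy for "SQL Server is ready for logins".

```powershell
# Sketch: wait until a TCP port accepts connections, retrying with a delay.
function Wait-TcpPort {
    param(
        [Parameter(Mandatory)][string]$HostName,
        [Parameter(Mandatory)][int]$Port,
        [int]$Attempts = 30,
        [int]$DelaySeconds = 2
    )
    for ($i = 1; $i -le $Attempts; $i++) {
        $client = [System.Net.Sockets.TcpClient]::new()
        try {
            $client.Connect($HostName, $Port)
            return $true                      # something is listening
        }
        catch {
            # Connection refused or host not resolvable yet: wait and retry.
            if ($i -lt $Attempts) { Start-Sleep -Seconds $DelaySeconds }
        }
        finally {
            $client.Dispose()
        }
    }
    $false
}
```

From inside the `ci` container, the database is reached by its service name on the container port, so the call would be `Wait-TcpPort -HostName test-db -Port 1433` rather than the host-mapped 6433.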

In our next post, we'll look at ways of running this build setup in some sort of hosted build environment.