Containers - What Are They Good For? Build Environments?

In the last post, I looked at how containers could make local development easier for our typical projects, and mainly found they work well for dependencies.

Next up, I wanted to see if they could make our continuous integration server "better". Better, as in, faster, more reliable, more deterministic. But first, let's review our typical environment:

We have Continuous Integration servers running either on-premises (with TFS, Jenkins, TeamCity) or in the cloud (VSTS, AppVeyor). We also run builds locally, as we're strong believers that you should be able to run your build locally before pushing your changes.

In either of our main options, on-prem or cloud, your build runs on a build agent. Most build servers also have the concepts of build queues/pools/requirements. In any case, when a build is triggered, the build server runs a build on a build agent.

Cloud build agents typically run your build in a purpose-built VM. AppVeyor and VSTS even have a few build images to choose from, but most importantly, these build images are pre-installed with a LOT of stuff:

This means that when our build agent starts up, we already have all of our "typical" build dependencies pre-installed, including popular services and databases. The hosted build servers cast a wide net with the software they install, as it's quicker to have this software pre-installed than it is to install it at build-time.

The other major advantage of this approach, of course, is that your build is quicker when everything is pre-installed.

For on-prem build agents, you'll typically create a VM, install the build agent, and whatever software and services are needed to successfully run your build. Once this is installed, you don't need to re-do it. Some CI tools let you connect to Elastic Beanstalk with your own custom-built AMIs, giving you on-demand build agents. But this is still quite rare - most CI servers, for your own custom build agents, require those build agents to be running and registered first, and only then will a build run on that agent.

Our starting point

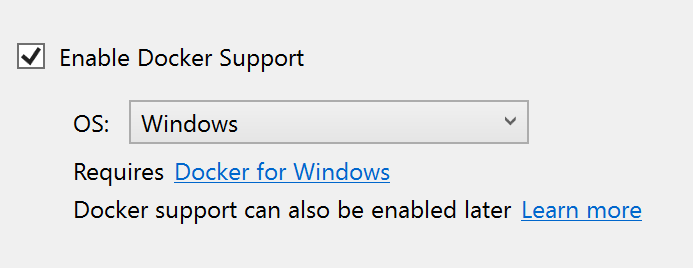

With all this in mind, what do we actually get out of the box when we create a new project with Docker support in Visual Studio? When you create a new ASP.NET Core project, one of the configuration items available for you is to enable Docker support:

But what does this actually do? It turns out, from a delivery pipeline perspective, next to nothing. The Docker support in Visual Studio provides runtime support, not build pipeline support. It gives you the ability to run your .NET Core application inside a container, but nothing more. It does not provide an actual CI build environment in the form of a container. We can see more by looking at the dockerfile:

FROM microsoft/aspnetcore:2.0-nanoserver-1709 AS base

WORKDIR /app

EXPOSE 80

FROM microsoft/aspnetcore-build:2.0-nanoserver-1709 AS build

WORKDIR /src

COPY AspNetCoreApp.sln ./

COPY AspNetCoreApp/AspNetCoreApp.csproj AspNetCoreApp/

RUN dotnet restore -nowarn:msb3202,nu1503

COPY . .

WORKDIR /src/AspNetCoreApp

RUN dotnet build -c Release -o /app

FROM build AS publish

RUN dotnet publish -c Release -o /app

FROM base AS final

WORKDIR /app

COPY --from=publish /app .

ENTRYPOINT ["dotnet", "AspNetCoreApp.dll"]

This multi-stage dockerfile uses two base images. The first is the runtime image, microsoft/aspnetcore:2.0-nanoserver-1709; since I chose Windows as my Docker OS, it's the nanoserver-based image. The other base image, for actually building, is microsoft/aspnetcore-build:2.0-nanoserver-1709. It's also nanoserver-based, but the big difference between the two lies in what's actually included in the image. The ASP.NET Core "build" images base themselves first on the .NET Core SDK images, and add:

- Node.js

- npm

- gulp

- bower

- git

Plus a "warmed up" cache of NuGet packages by creating a dummy project and running dotnet restore on it. All of this put together gives us the ability, and really this is it, to run dotnet build commands on them. So while this is a great environment to compile and run our application, it's nowhere near adequate as an actual build environment.

Our build environment requires far, far more things than this. I also saw that the build script in our dockerfile only builds a single csproj, whereas our actual CI builds build the solution, not just a single project. You might want to build the solution file, but you can't: the .NET Core SDK build system does not support those special Docker build projects (yet). Whoops.

Compare with the VSTS build agent dockerfile which includes:

- Windows Server Core (not nanoserver)

- git

- node

- JDK v8

- curl

- maven

- gradle

- ant

- Docker

- 20 other things

Basically, it's the VM image of VSTS scripted out as a Docker image. Except, and this is important for us, it doesn't include any databases.

One other thing astute readers will notice is that the VSTS image uses Chocolatey, and the ASP.NET Core "build" images do not. There are a number of reasons why not, chiefly that the nanoserver image does NOT include:

- Any full .NET Framework of any kind

- Any version of PowerShell

Chocolatey requires both, so we can't use it, or other basic tools like it, to build a nanoserver-based image.

I wouldn't let this stop me, so with all this in mind, let's create a dockerfile that can actually run our build.

A real CI Docker environment

Choices, choices. We need to decide what environment to build for our CI build, and how we compose our Lego docker bricks together. Our build requires a few dependencies:

- PowerShell (any version, including PowerShell Core)

- .NET Core SDK

- A SQL database to run our tests against

What I hope we don't need is:

- Full .NET

- Visual Studio

Immediately we're presented with a problem. In our "normal" CI environments, we use a number of tools that require full .NET, including:

- RoundhousE for database migrations

- octo.exe for Octopus Deploy packaging/releasing (though there is a .NET Core version of octo.exe, for *nix etc. as well)

We either need to find new tools, or build a bigger image that includes the tools needed to run.

The other big decision we need to make is - do we bake our service dependencies (SQL Server) into our CI image? Or do we use docker compose to create a CI "environment" that is two or more docker images networked together to actually run our build?
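To make the second option concrete, a compose file for it might look roughly like the sketch below. This is an illustration under stated assumptions, not a working setup: the file name docker-compose.ci.yml, the Dockerfile.ci build file, the service names, the connection string variable, the database name, and the password are all hypothetical placeholders. The SQL Server image and its ACCEPT_EULA and sa_password settings are assumed to be the Windows-based developer image.

```yaml
# docker-compose.ci.yml (hypothetical file name)
version: "3"

services:
  # The build container. Assumed to be built from a hypothetical Dockerfile.ci
  # whose entrypoint runs the build script.
  ci:
    build:
      context: .
      dockerfile: Dockerfile.ci
    depends_on:
      - db
    environment:
      # Hypothetical variable name; the host "db" is the service name below,
      # which resolves on the network that compose creates.
      ConnectionStrings__Default: "Server=db;Database=AppTests;User Id=sa;Password=Placeholder_Passw0rd!"

  # The database our tests run against (assumed Windows-based SQL Server image).
  db:
    image: microsoft/mssql-server-windows-developer
    environment:
      ACCEPT_EULA: "Y"
      sa_password: "Placeholder_Passw0rd!"
```

Running it would be something along the lines of docker-compose -f docker-compose.ci.yml up --build --exit-code-from ci, so that the exit code of the ci container becomes the result of the build. One caveat: depends_on only controls start order. It does not wait for SQL Server to accept connections, so the build script would need its own retry logic before running tests.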

And finally, how can we reliably run this build locally, and in some sort of CI environment? What CI environments support running docker images, and what does that actually look like?

In the next post, I'll walk through migrating our existing build infrastructure to various forms of Docker builds, and show how each of them, on Windows, is still not viable.