Building Messaging Endpoints in Azure: Container Apps

Posts in this series:

- Evaluating the Landscape

- A Generic Host

- Azure WebJobs

- Azure Container Instances

- Azure Functions

- Azure Container Apps

Well, it's been a while since we visited this series! I intended to follow up with a post on Kubernetes, but to be honest, getting all the pieces of Kubernetes working is far too complicated for a blog post. I use k8s on my projects, but I still think it's overly complex. Managing a Kubernetes cluster is about as exciting as watching paint dry; it needs something on top of it to make it simpler.

Enter Azure Container Apps! It's an abstraction over Kubernetes that exposes just enough of its capabilities to cover the things I typically care about. Yes, it's yet another way of hosting containers in Azure, but what I like about Container Apps is that they provide the simplicity of App Service apps without all the baggage of assuming a web app.

Creating our message endpoint

Azure Container Apps only require a containerized app to deploy, so that's our first step. As with Container Instances, we'll create a Worker Service app and add NServiceBus to the mix:

```csharp
using ContainerAppReceiver;
using NServiceBus;
using NServiceBusExtensions;

var host = Host.CreateDefaultBuilder(args)
    // User secrets hold the connection string during local development
    .ConfigureAppConfiguration(cfg => cfg.AddUserSecrets<SaySomethingHandler>())
    .UseNServiceBus(hostContext =>
    {
        var endpointConfig = new EndpointConfiguration("NsbAzureHosting.ContainerAppReceiver");

        // Resolves the "ConnectionStrings:AzureServiceBus" configuration key
        var connectionString = hostContext.Configuration.GetConnectionString("AzureServiceBus");

        endpointConfig.ConfigureEndpoint(connectionString);

        // Let the endpoint create its queues and other infrastructure on startup
        endpointConfig.EnableInstallers();

        return endpointConfig;
    })
    .Build();

await host.RunAsync();
```

Checking the box to add Docker support gives us our basic Docker container image:

```dockerfile
#See https://aka.ms/containerfastmode to understand how Visual Studio uses this Dockerfile to build your images for faster debugging.

FROM mcr.microsoft.com/dotnet/runtime:6.0 AS base
WORKDIR /app

FROM mcr.microsoft.com/dotnet/sdk:6.0 AS build
WORKDIR /src
COPY ["ContainerAppReceiver/ContainerAppReceiver.csproj", "ContainerAppReceiver/"]
RUN dotnet restore "ContainerAppReceiver/ContainerAppReceiver.csproj"
COPY . .
WORKDIR "/src/ContainerAppReceiver"
RUN dotnet build "ContainerAppReceiver.csproj" -c Release -o /app/build

FROM build AS publish
RUN dotnet publish "ContainerAppReceiver.csproj" -c Release -o /app/publish

FROM base AS final
WORKDIR /app
COPY --from=publish /app/publish .
ENTRYPOINT ["dotnet", "ContainerAppReceiver.dll"]
```

So far, there's not much new here; local development looks exactly the same. Our dummy message handler, which implements a request/reply pattern, is straightforward as well:

```csharp
using NServiceBus;

namespace ContainerAppReceiver;

public class SaySomethingHandler : IHandleMessages<SaySomethingCommand>
{
    private readonly ILogger<SaySomethingHandler> _logger;

    public SaySomethingHandler(ILogger<SaySomethingHandler> logger)
    {
        _logger = logger;
    }

    public async Task Handle(
        SaySomethingCommand message,
        IMessageHandlerContext context)
    {
        // Structured logging template instead of string interpolation
        _logger.LogInformation("Received message: {Message}", message.Message);

        // Simulate a slow external call (0 to 1999 ms). Handlers run concurrently,
        // so use the thread-safe Random.Shared rather than a shared static Random.
        await Task.Delay(Random.Shared.Next(2000));

        await context.Reply(new SaySomethingResponse
        {
            Message = message.Message + " back at ya!"
        });
    }
}
```

The only difference here is a random delay (under two seconds) to simulate communicating with some expensive external resource (say, Azure SQL).

Where it gets interesting is deploying our app as a Container App.

Publishing our Container App

Because I'm lazy, I just right-click the project and select "Publish", which will create a publish profile for our application. I select Azure as the target:

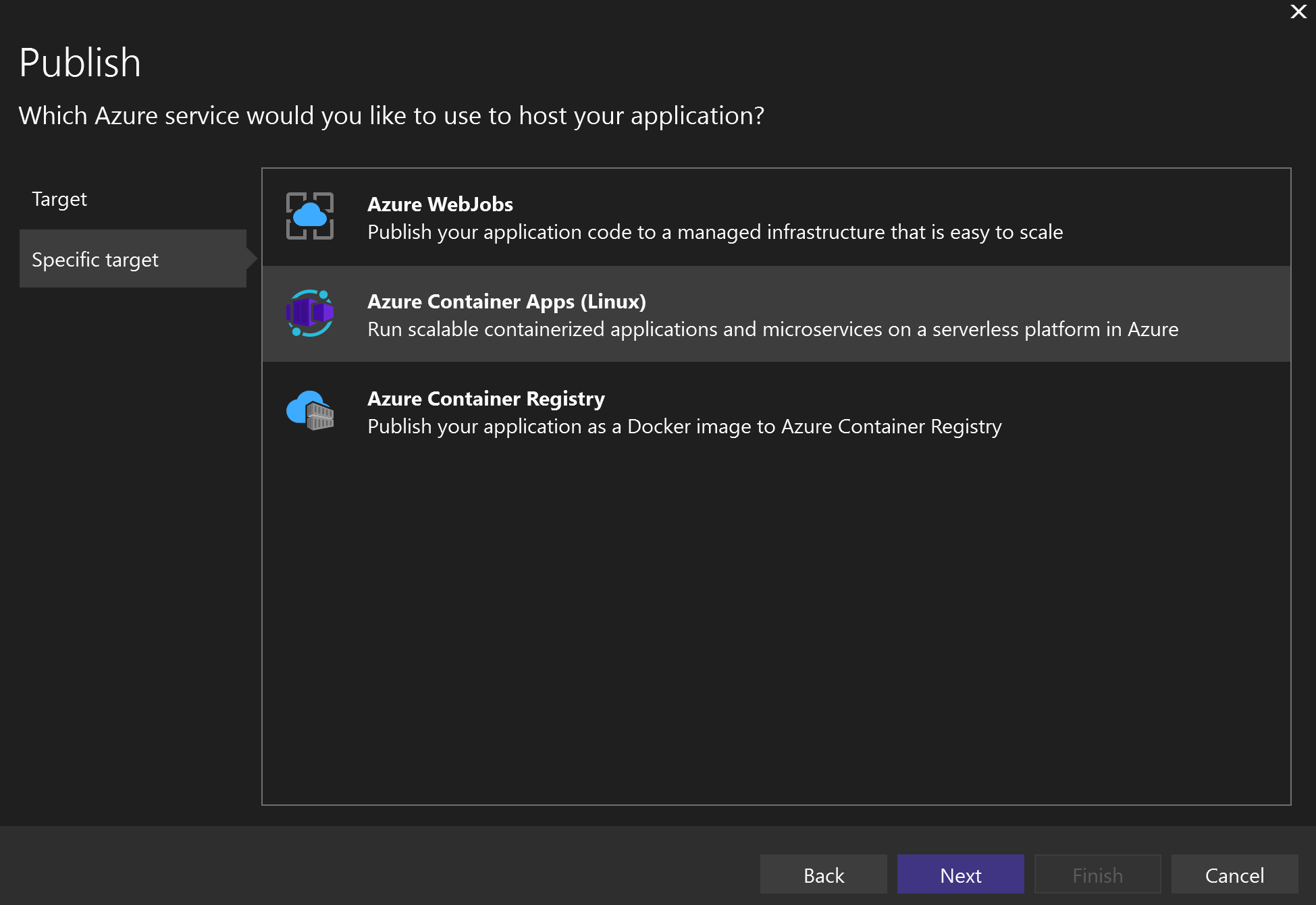

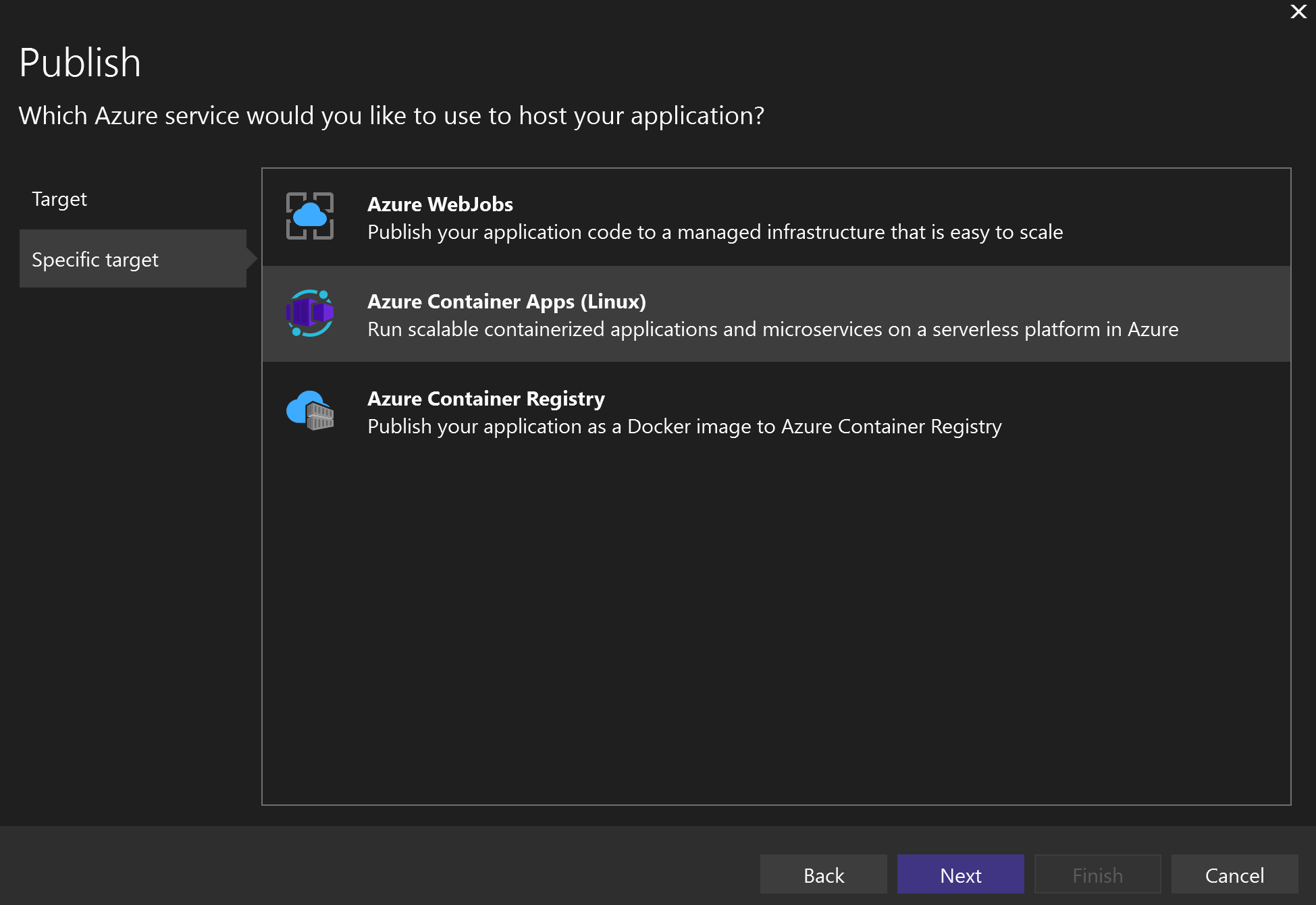

Then I select Azure Container Apps (Linux):

I then create a new Azure Container App:

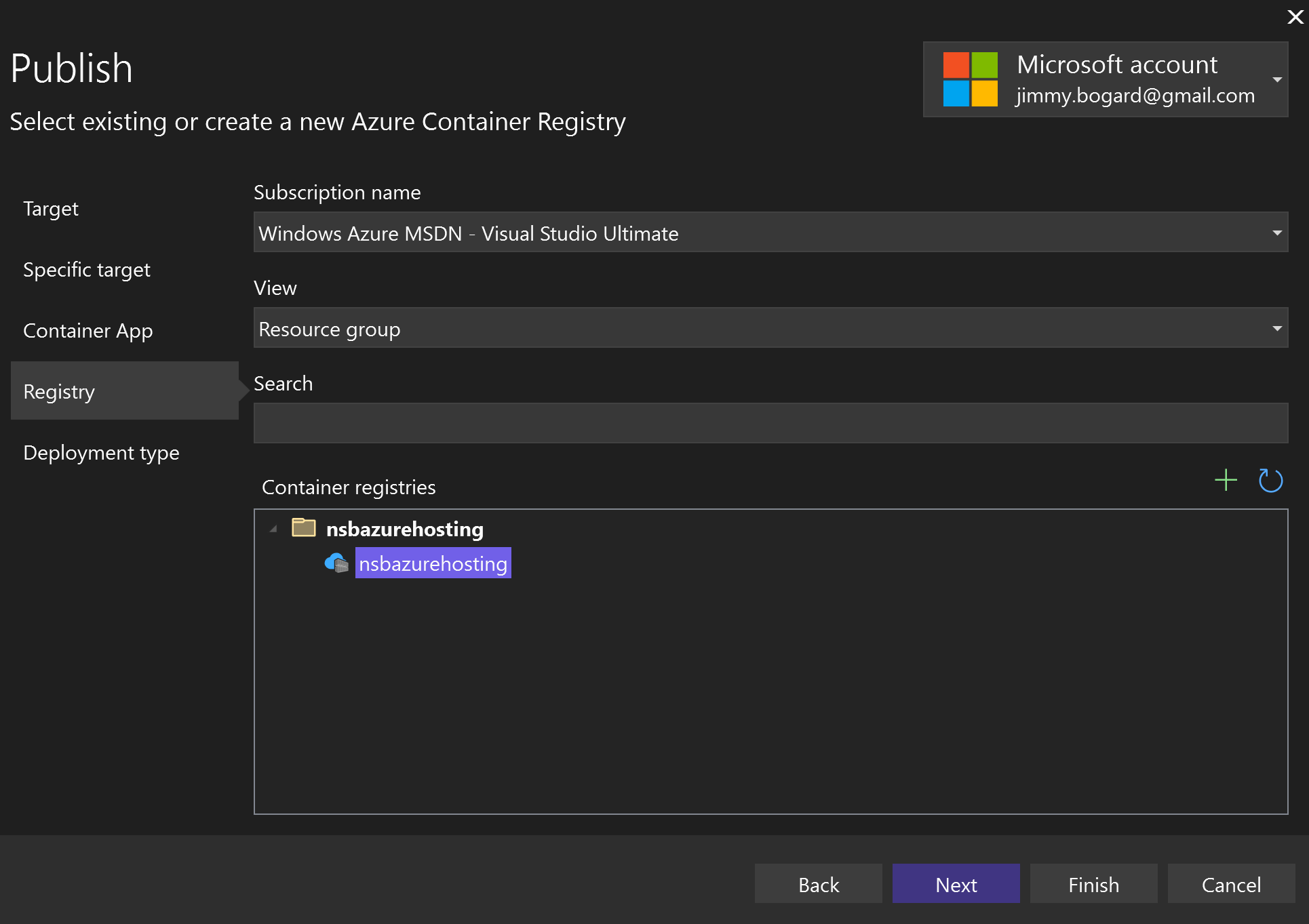

Finally, I select the existing Container registry I had created for my other container apps:

With this in place, I can publish my app to Azure. Once published, there are still a few things I need to set up in my Azure configuration.

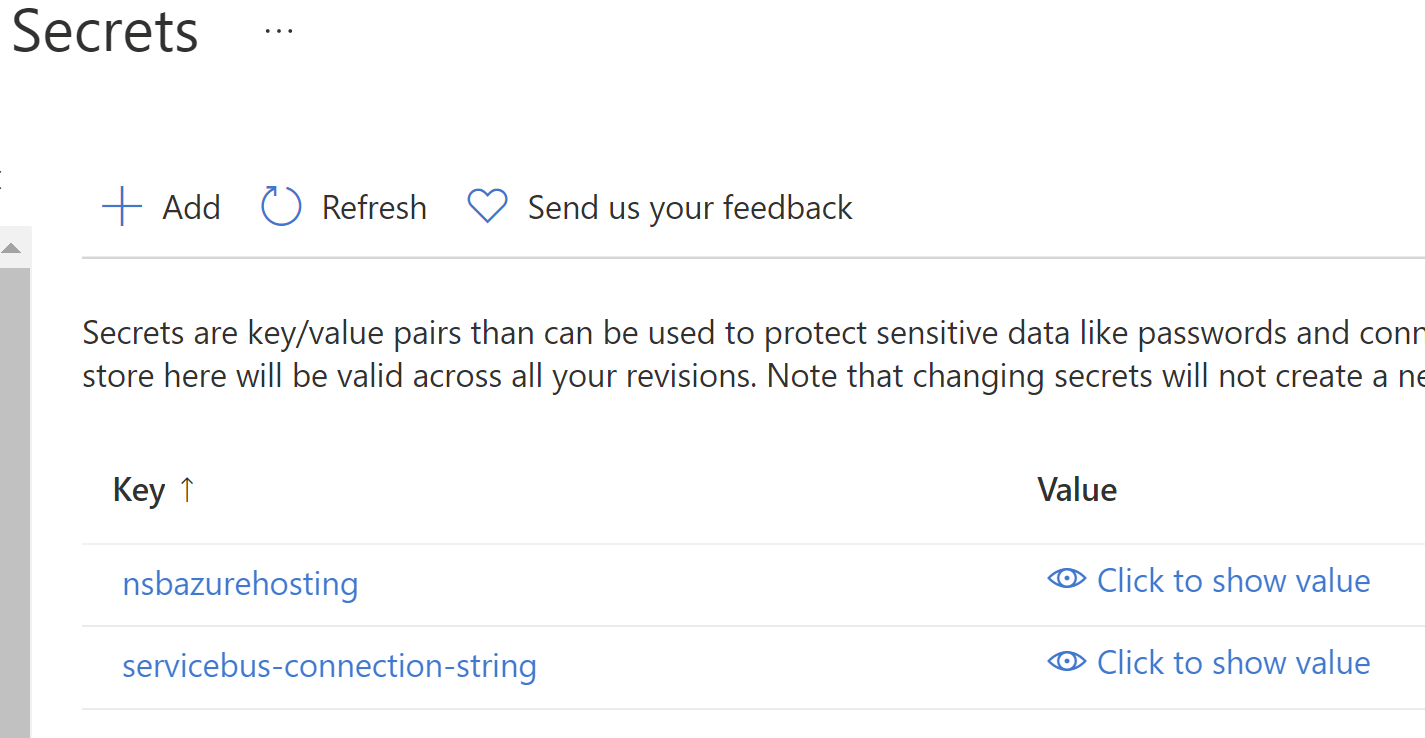

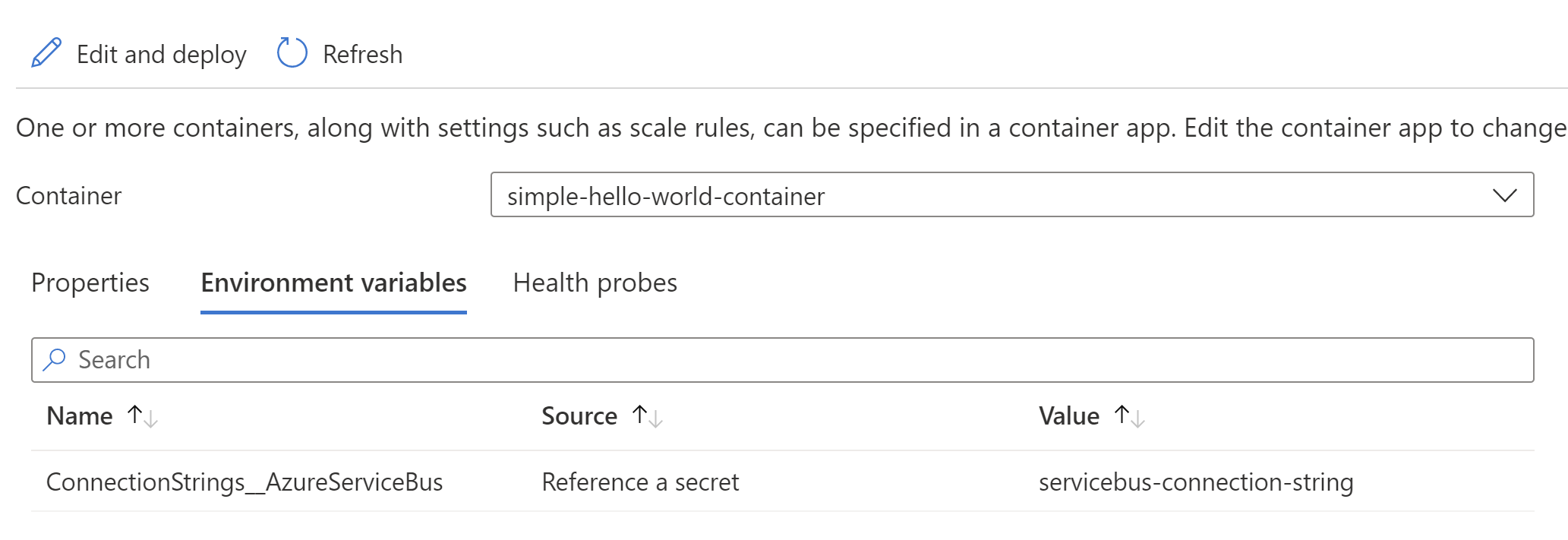

First, I need to configure my Azure Service Bus connection string. I've configured my app to look for a connection string named "AzureServiceBus". This configuration value can come from a few different places, but an easy way is to configure a secret for my Container App. I'll create a secret for my connection string:

Next, we need to configure an environment variable for our container to reference this secret:

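The name is important here: we need to make sure the environment variable configuration source picks up our connection string value. The .NET environment variable provider treats a double underscore as the ":" key delimiter, so an environment variable named "ConnectionStrings__AzureServiceBus" becomes the "ConnectionStrings:AzureServiceBus" key that GetConnectionString("AzureServiceBus") reads. If I were doing this with raw Helm charts, I'd probably use Kubernetes secrets, likely backed by Key Vault.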
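To make that naming rule concrete, here's a minimal, dependency-free sketch that mimics the lookup: "__" in an environment variable name becomes ":", keys compare case-insensitively, and a connection string named "AzureServiceBus" is read from "ConnectionStrings:AzureServiceBus". It's a simplification rather than the real environment variable configuration provider (which also handles prefixes and special ones such as SQLCONNSTR_), and the ToConfigKey and LookUpConnectionString helpers and the placeholder value are hypothetical.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: mimics how the environment variable configuration source
// turns "__" into the ":" key delimiter (":" isn't portable in env var names).
static string ToConfigKey(string envVarName) => envVarName.Replace("__", ":");

// Hypothetical helper: mimics GetConnectionString(name), which reads the
// "ConnectionStrings:{name}" key.
static string? LookUpConnectionString(IDictionary<string, string> envVars, string name)
{
    // Configuration keys are case-insensitive
    var config = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);
    foreach (var (key, value) in envVars)
    {
        config[ToConfigKey(key)] = value;
    }

    return config.TryGetValue($"ConnectionStrings:{name}", out var connectionString)
        ? connectionString
        : null;
}

// Environment variables as the container might see them. The value is a placeholder
// standing in for the secret reference that Container Apps resolves at runtime.
var containerEnv = new Dictionary<string, string>
{
    ["ConnectionStrings__AzureServiceBus"] = "<service-bus-connection-string>",
    ["AzureServiceBus"] = "not picked up: missing the ConnectionStrings prefix",
};

Console.WriteLine(LookUpConnectionString(containerEnv, "AzureServiceBus"));
Console.WriteLine(LookUpConnectionString(containerEnv, "Missing") ?? "(not found)");
```

A variable named just "AzureServiceBus" would not be found by GetConnectionString, which is why the prefix and double underscore matter.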

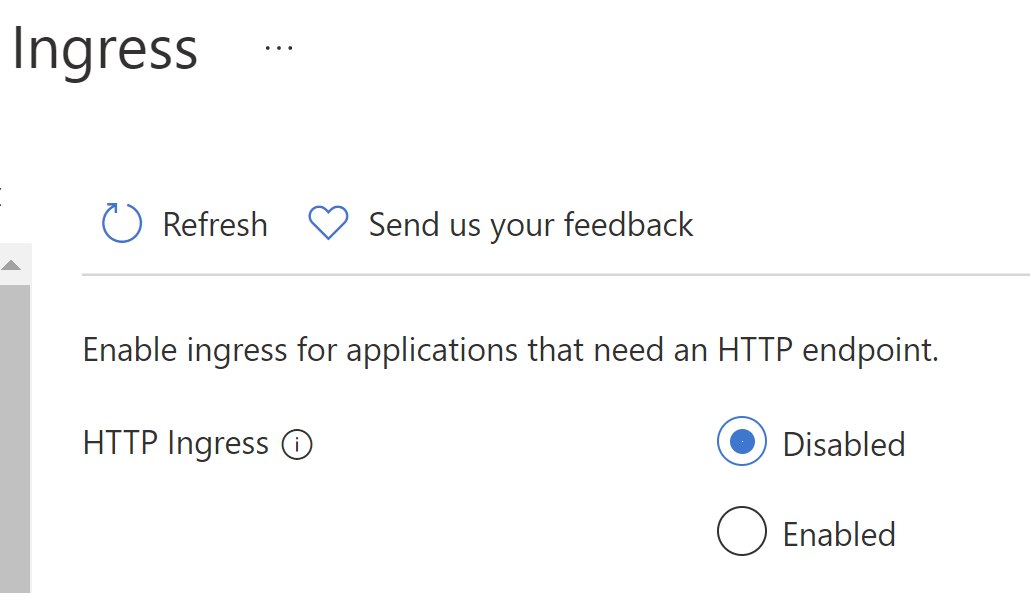

One final piece is to remove any ingress for our Container App. We don't want to accept any incoming HTTP traffic, so let's turn that off:

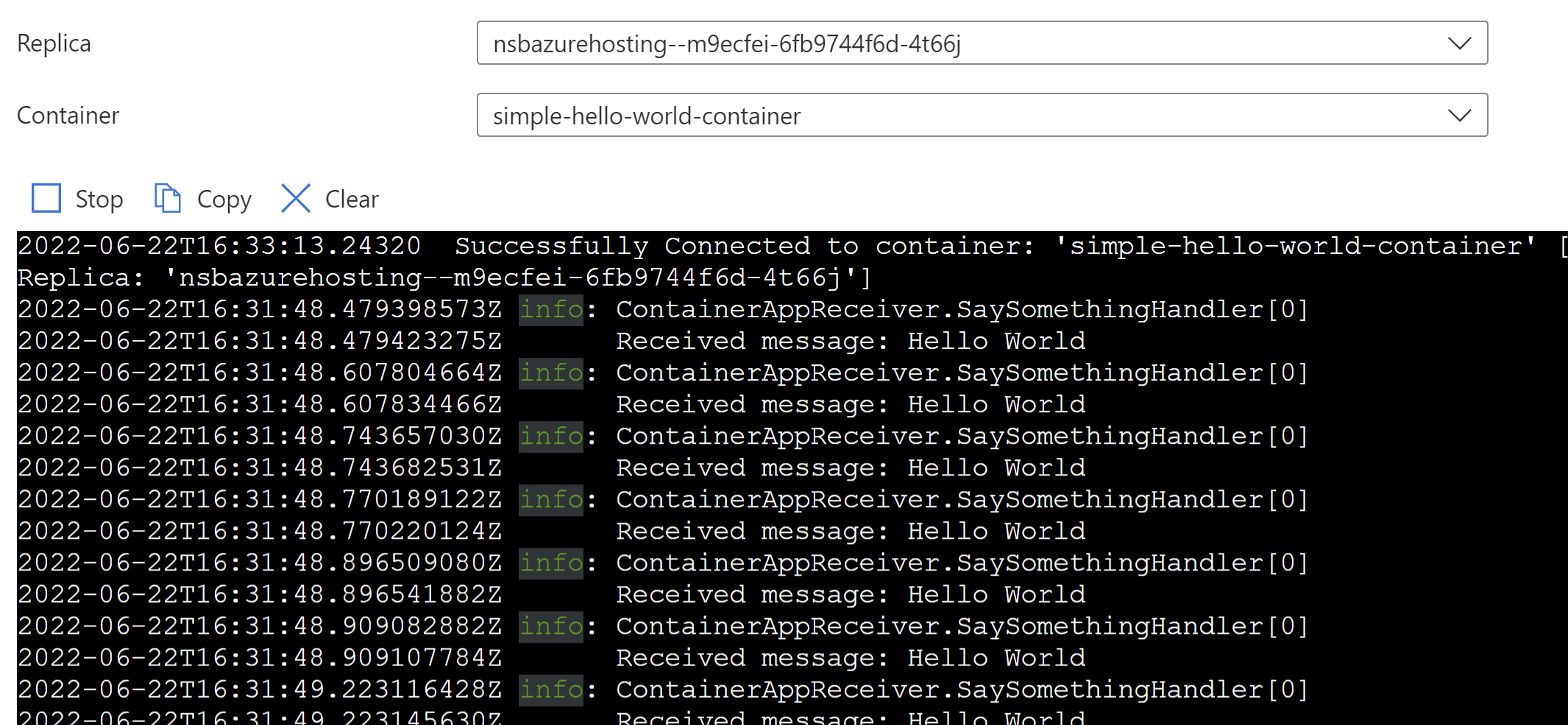

Once that's in place, we can run our Container App and watch it accept messages! I'll send messages from a locally running app and see them handled in my Container App:

Then I can see the replies in my locally running app (still pulling from Azure Service Bus):

```text
info: Sender.Handlers.SaySomethingContainerAppResponseHandler[0]
      Received response Hello World back at ya!
info: Sender.Handlers.SaySomethingContainerAppResponseHandler[0]
      Received response Hello World back at ya!
info: Sender.Handlers.SaySomethingContainerAppResponseHandler[0]
      Received response Hello World back at ya!
```

All in all, pretty straightforward! But right now, I've just got a single container instance running. What if I receive a barrage of messages and want to scale up (or down)?

Auto-scaling my Container App

Since Container Apps are built on Kubernetes and KEDA, I can use any KEDA-supported scaler for event-driven scaling, including Azure Service Bus. The KEDA Azure Service Bus scaler can scale based on the number of messages in a:

- Topic/Subscription

- Queue

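In NServiceBus, messages are ALWAYS consumed from an Azure Service Bus queue, never directly from a subscription (the transport forwards subscription messages to the endpoint's input queue), so the queue is what we want KEDA to monitor. Our KEDA rules would typically be defined in our Container App manifest, but again, because I'm lazy, I can configure this directly in the Azure portal: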

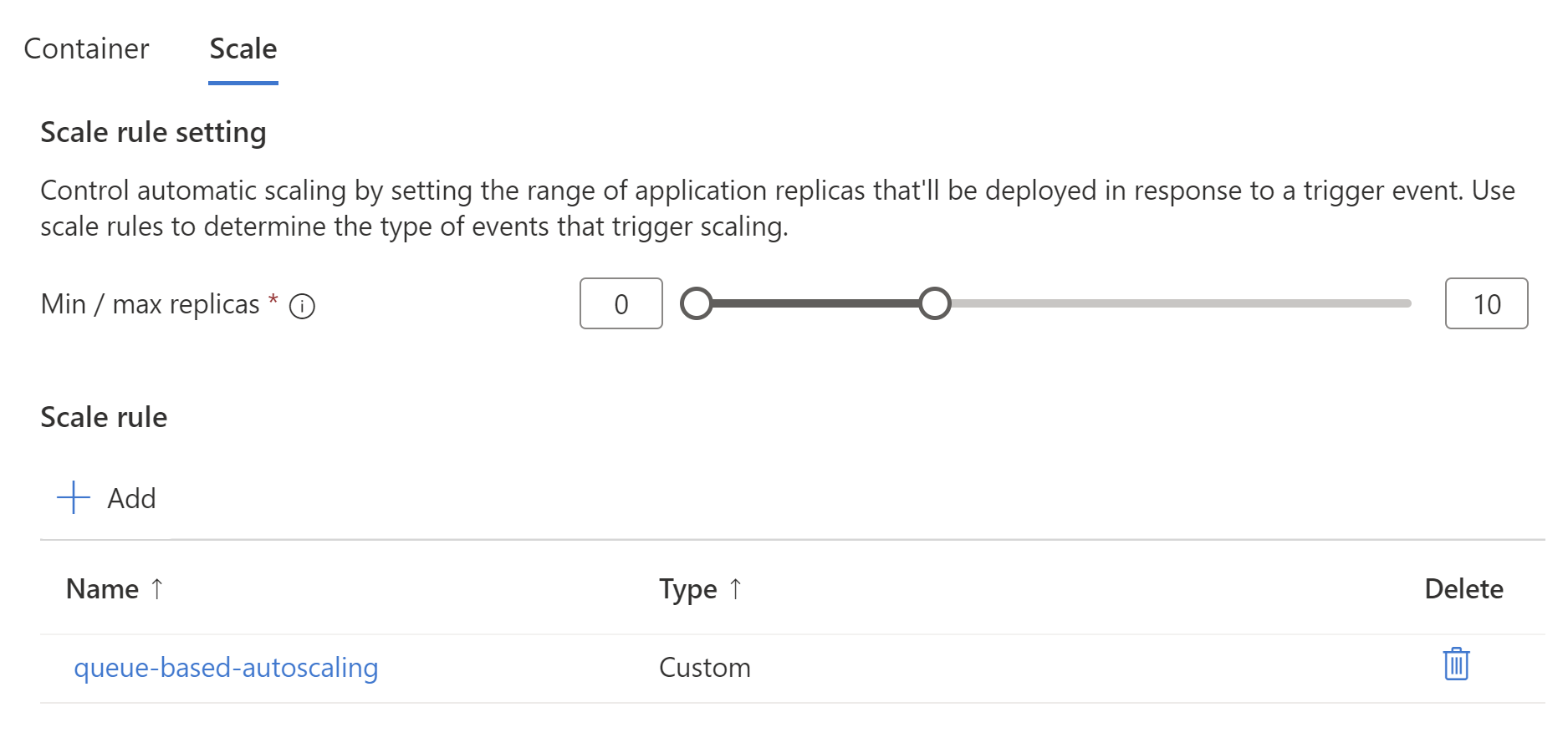

I set the minimum and maximum number of replicas, in my case 0 to 10. In a production scenario I might keep at least one replica running, but for some messaging scenarios a cold start of the container is acceptable because we're already processing messages asynchronously.

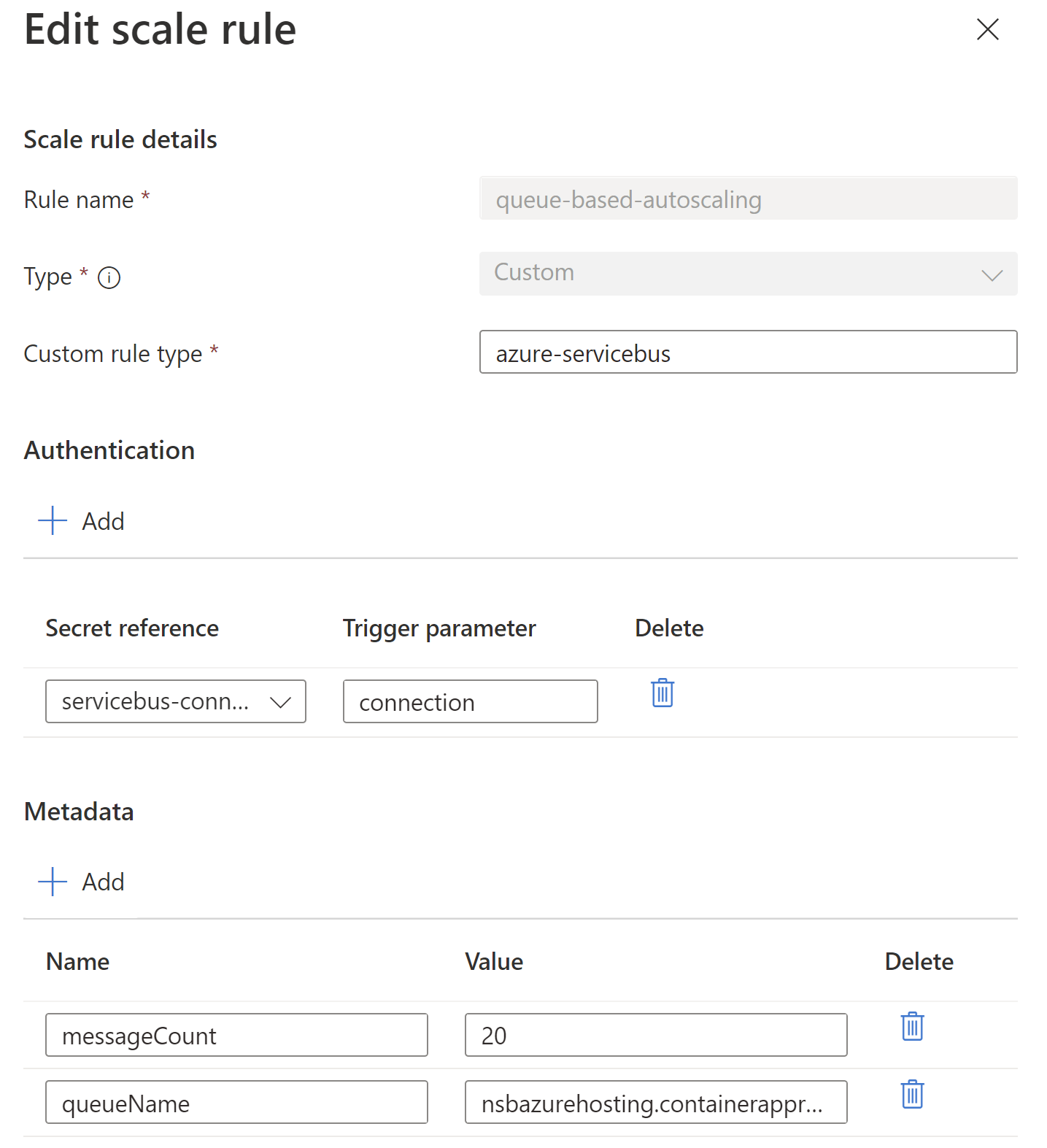

Next, I create a custom scale rule using KEDA configuration:

The custom rule type is the KEDA name of the trigger (azure-servicebus). KEDA needs to connect to Azure Service Bus to read the queue length, so I map the connection string secret to the trigger's "connection" parameter. The other metadata values line up with the KEDA Azure Service Bus scaler settings: the number of messages that triggers scaling up ("messageCount") and the queue to monitor ("queueName").
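As a rough mental model of what messageCount and the replica limits do together, here's a simplified sketch of the replica count the rule aims for. KEDA exposes the queue length as a metric, the Horizontal Pod Autoscaler targets roughly ceil(queueLength / messageCount) replicas, and the result is clamped to the min/max settings, with an empty queue allowing scale-to-zero when the minimum is 0. It deliberately ignores HPA tolerance and stabilization windows, KEDA polling and cooldown intervals, and activationMessageCount, so treat the numbers as approximate. DesiredReplicas is a hypothetical helper, not a KEDA or Container Apps API, and messageCount = 10 is an assumed value.

```csharp
using System;

// Hypothetical helper approximating the replica count targeted by a KEDA
// azure-servicebus scale rule. Simplified: ignores HPA tolerance/stabilization,
// KEDA polling and cooldown intervals, and activationMessageCount.
static int DesiredReplicas(long queueLength, int messageCount, int minReplicas, int maxReplicas)
{
    if (messageCount <= 0) throw new ArgumentOutOfRangeException(nameof(messageCount));
    if (maxReplicas < 1 || minReplicas < 0 || minReplicas > maxReplicas)
        throw new ArgumentException("Expected 0 <= minReplicas <= maxReplicas and maxReplicas >= 1.");

    // Empty queue: the scaler is inactive, so the app can drop to minReplicas (possibly 0)
    if (queueLength <= 0) return minReplicas;

    // Target roughly messageCount messages per replica, rounding up
    long target = (long)Math.Ceiling((double)queueLength / messageCount);

    // At least one replica while there is work, never more than the configured maximum
    return (int)Math.Clamp(target, Math.Max(minReplicas, 1), maxReplicas);
}

// Replica range from the post (0 to 10); messageCount = 10 is an assumption
foreach (var queueLength in new long[] { 0, 5, 35, 250 })
{
    Console.WriteLine($"{queueLength,4} messages -> {DesiredReplicas(queueLength, 10, 0, 10)} replicas");
}
```

With messageCount = 10, 35 queued messages target 4 replicas, while 250 messages are capped at the maximum of 10; once the queue drains, the app can fall back to zero.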

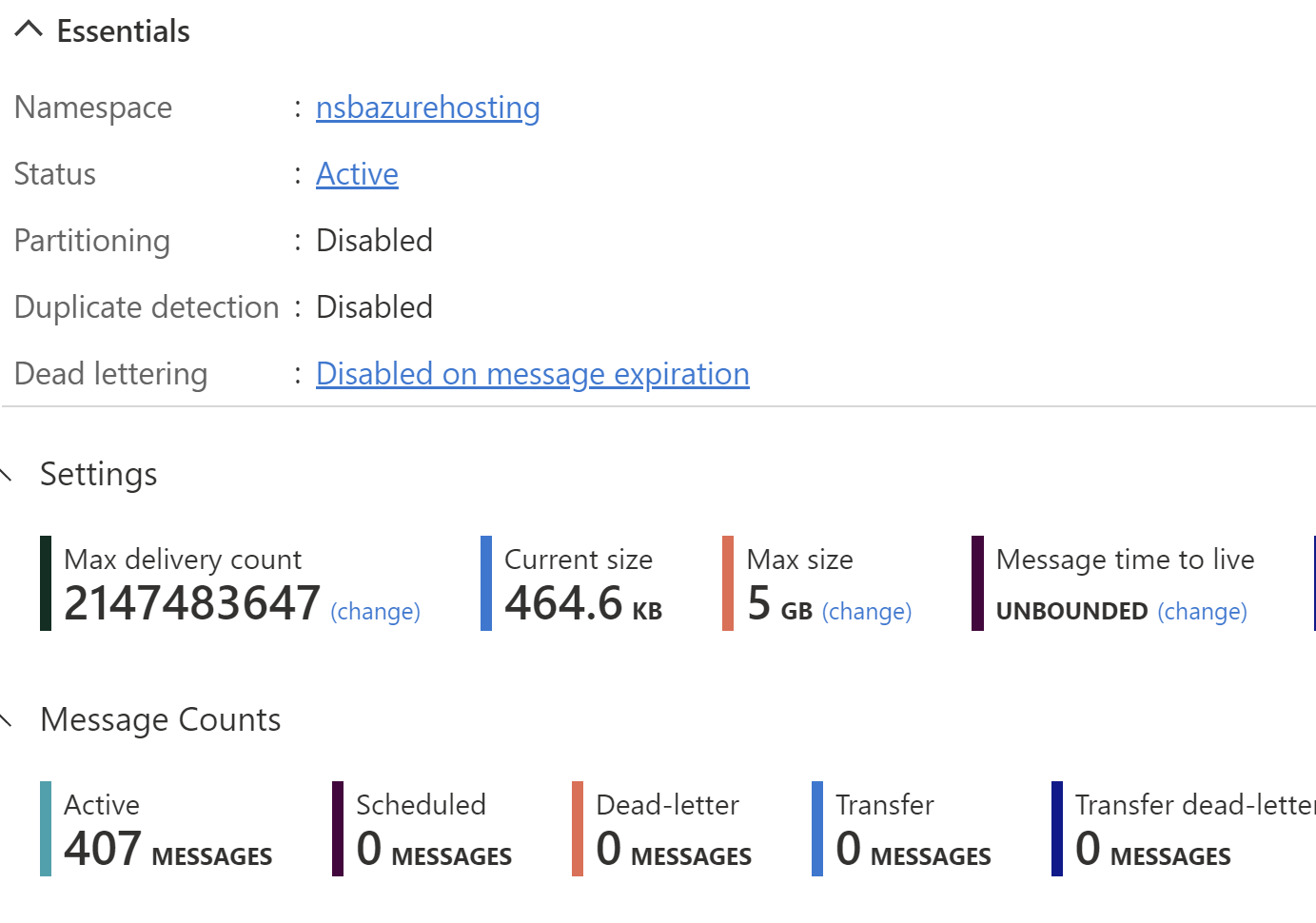

With this in place, I can dump a ton of messages in my Container App's queue:

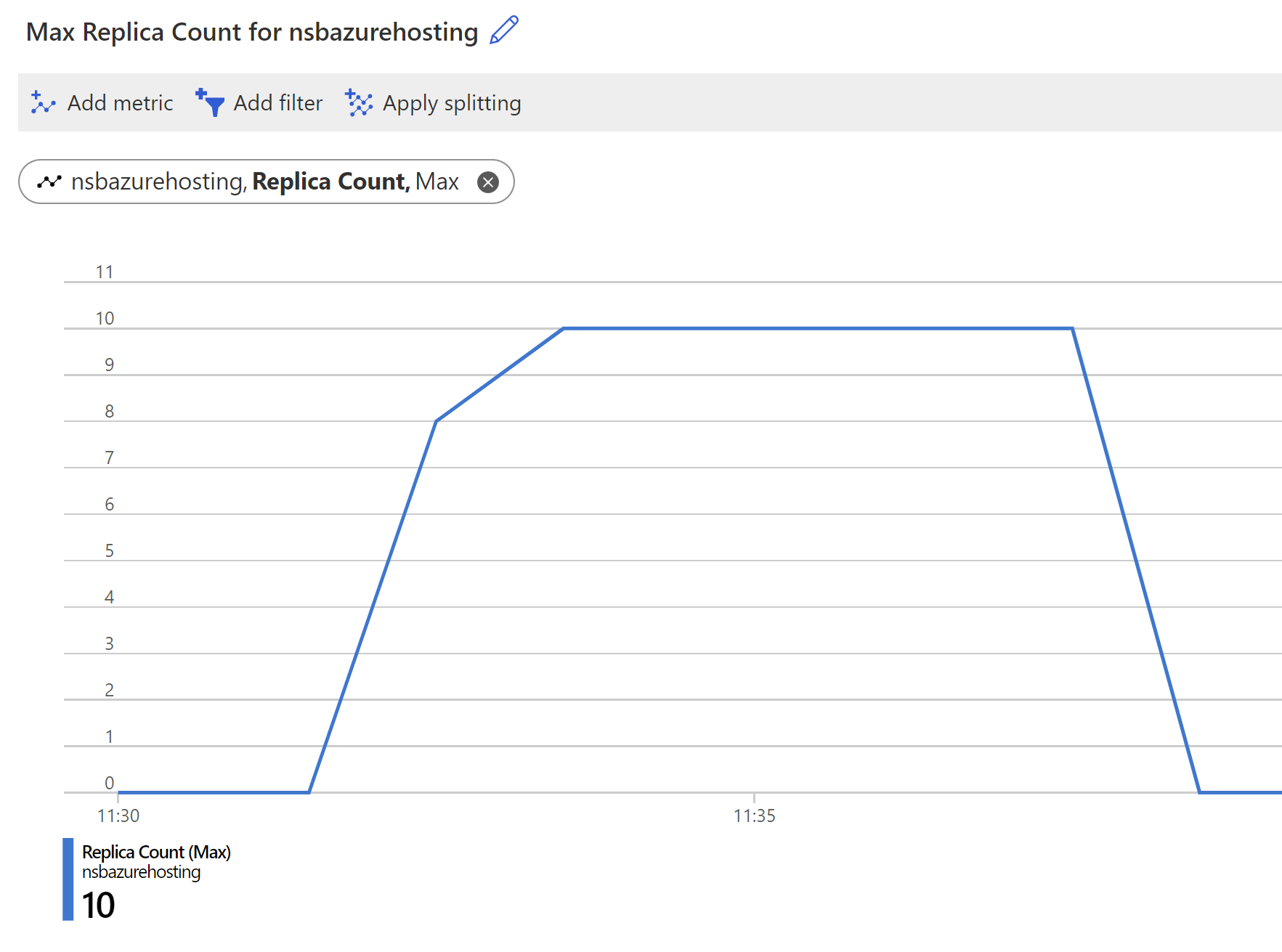

And watch my Container Apps instances scale up to be able to handle this new load:

As messages pile up, my container instances scale from zero to ten, then back down to zero once all the messages are processed. Having set up a similar configuration with KEDA manually, I find this approach so much easier.

There's still a lot to explore in the Container Apps space, namely its security and networking capabilities. I also didn't touch the health probes for liveness, readiness, or startup, but those are quite different for message endpoints since we're not accepting HTTP traffic.

The Container Apps resource strikes a nice balance between bare-metal Kubernetes and completely abstracted PaaS. At this point, I'd say Container Apps would be my go-to choice for deploying message endpoints to Azure.